Diary Studies in the Age of AI

Can machines really help us make sense of weeks of human behavior?

Diary studies are one of the most valuable and most painful research methods. Instead of a one-off usability session, you follow participants for days or weeks, capturing their real-life experiences as they happen.

This gives you something other methods can’t: long-term patterns. You see how frustration builds, how habits form, and how people adjust when things don’t work.

The challenge? Diary studies create huge amounts of messy, unstructured data. Hundreds of notes, photos, or voice memos pile up. Analyzing all that manually takes so long that many teams skip diary studies altogether.

Now AI promises to help by clustering themes, summarizing entries, and even spotting patterns researchers might miss. But here’s the truth: AI is good at speed and scale but bad at nuance. Without human judgment, you risk clean-looking charts that miss what really matters.

In This Issue

What Diary Studies Are (and Why They’re Still Relevant)

Why Traditional Analysis Breaks Down

How AI Can Help

Where AI Falls Short

A Hybrid Research Framework: Researcher + Machine

Examples of AI in Diary Studies

The Risks of Over-Reliance on Automation

UXCON25 Spotlight: Long-Term Research in the AI Era

Resource Corner

What Diary Studies Are (and Why They’re Still Relevant)

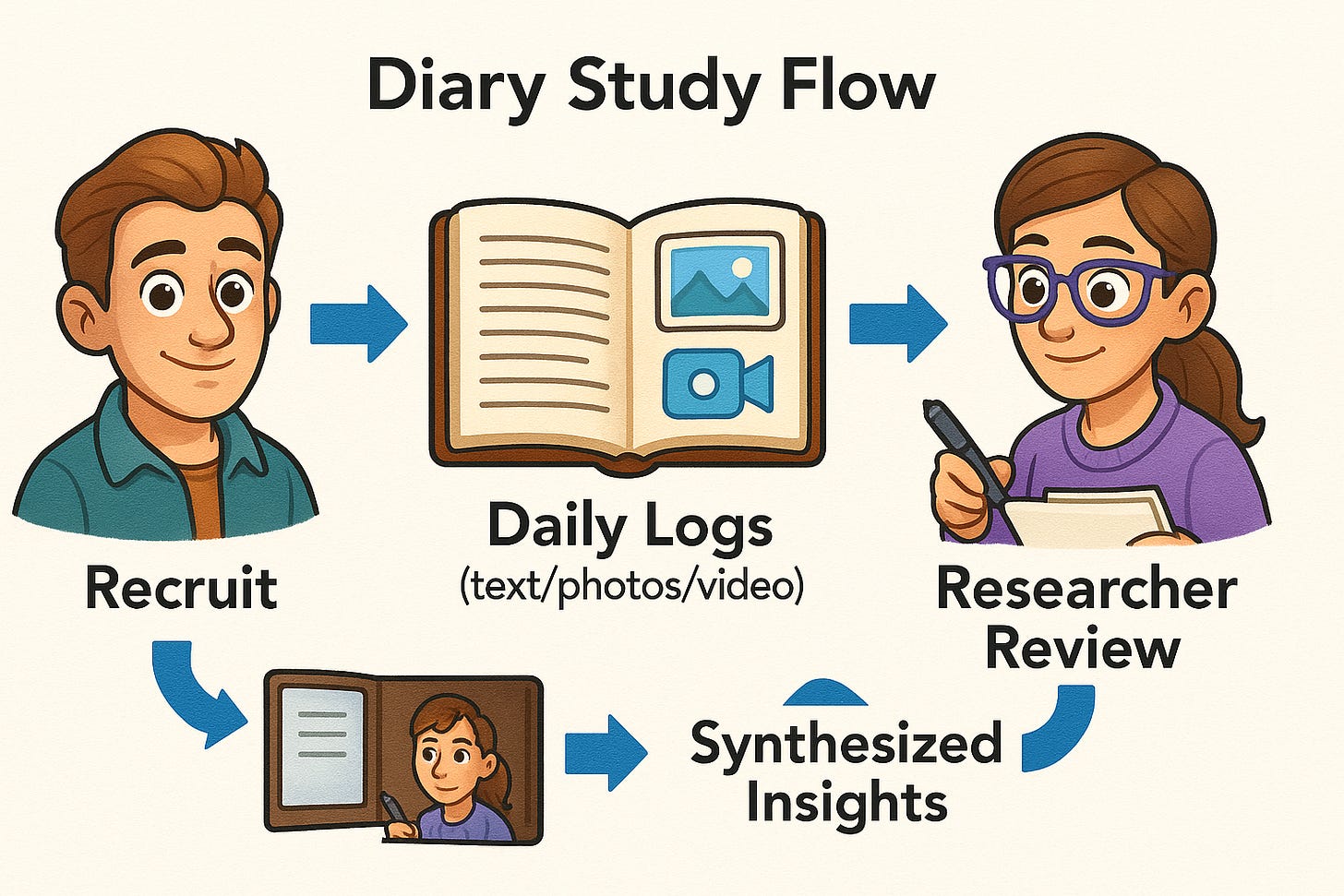

A diary study asks participants to log their experiences over time: writing a short entry, snapping a photo, or recording a voice note every day.

Example: A food app wants to know why people drop off after the first week. A two-week diary study reveals that users start strong, then stop logging meals because reminders feel nagging.

Why it matters: Unlike a usability test (snapshot), diary studies show how real-world use evolves over time.

Even as AI reshapes research, diary studies remain relevant because they capture context and change over time, things AI alone can’t invent.

Why Traditional Analysis Breaks Down

Diary studies are powerful but often fail in execution. Why?

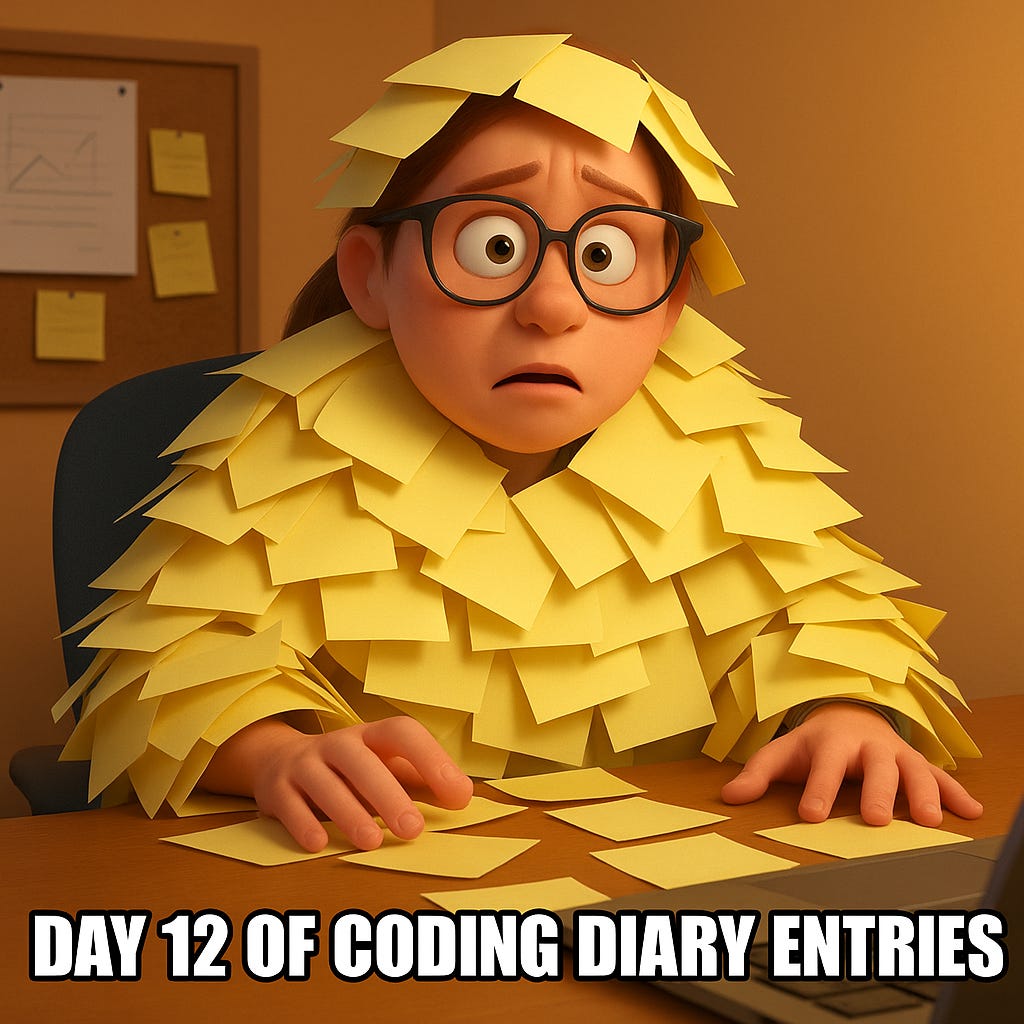

Volume overload: Even a modest study produces a flood of entries. For instance, 15 participants logging once a day for three weeks (21 days) produce 315 entries. Add screenshots or videos, and the workload can easily double.

Inconsistency: Some participants write novels, others write “fine.” Some skip days or send off-topic content, which makes it harder to compare across participants.

Manual coding pain: Traditionally, researchers tag each entry by hand (“frustration,” “trust,” “workaround”), then cluster the tags into themes. This process is accurate, but it can take weeks and eats up budgets and timelines.

In short: the insights are rich, but the analysis cost is high.
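
The volume figure above is simple arithmetic, and it is worth running before you scope a study. Here is a minimal sketch, assuming one entry per participant per day with no skipped days. The `minutes_per_entry` value is a hypothetical placeholder, not a benchmark; swap in your own team's estimate.

```python
def estimate_entries(participants: int, days: int, entries_per_day: int = 1) -> int:
    """Upper bound on entries, assuming nobody skips a day."""
    return participants * days * entries_per_day


def estimate_coding_hours(entries: int, minutes_per_entry: float) -> float:
    """Rough manual-coding time. minutes_per_entry is an assumption you supply."""
    return entries * minutes_per_entry / 60


entries = estimate_entries(participants=15, days=21)
print(entries)  # 315, the figure from the example above
print(estimate_coding_hours(entries, minutes_per_entry=4))  # 21.0 hours at an assumed 4 minutes each
```

Real studies will come in under the upper bound because participants skip days, but the estimate is still useful for budgeting analysis time.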

How AI Can Help: Coding, Clustering, Summarization

AI is well-suited to reduce the grunt work:

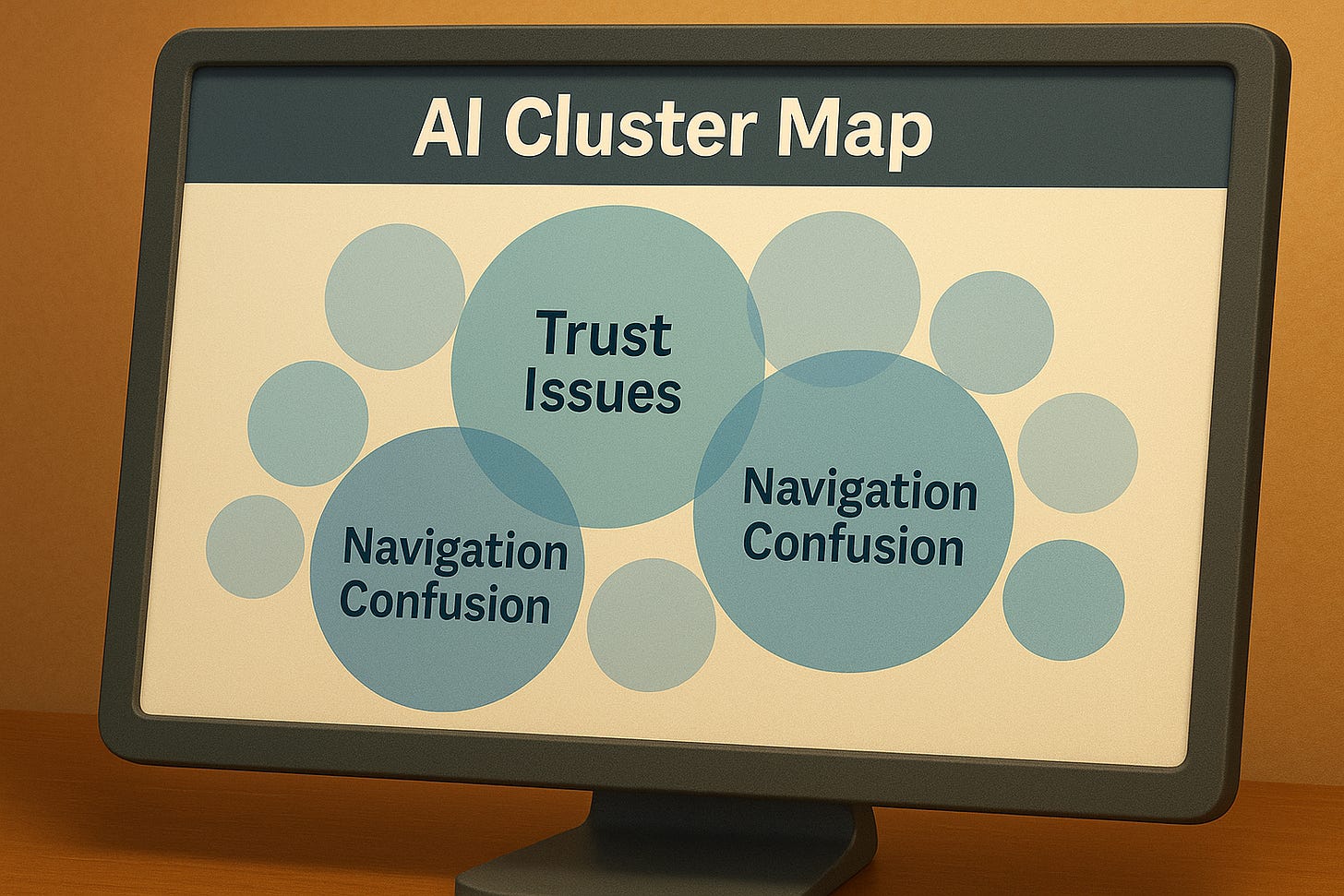

Auto-coding: AI tags entries with initial categories (e.g., “confusion,” “satisfaction,” “trust”).

Clustering: It groups similar entries across participants, surfacing common pain points.

Summarization: It generates short digests, e.g., “This week, five participants struggled with navigation.”

💡 But remember: AI doesn’t know which patterns matter for your product. It can surface repetition, but not relevance. That’s where you come in.
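
To make the three steps concrete, here is a toy sketch of auto-coding, clustering, and summarization. It is an illustration, not how any particular tool works: the `CODEBOOK` and its trigger words are hypothetical, and keyword matching stands in for the language model or embedding step a real product would likely use.

```python
from collections import defaultdict

# Hypothetical codebook: tag -> trigger words. Keyword matching is a stand-in
# for whatever model a real auto-coding tool would use.
CODEBOOK = {
    "confusion": {"confused", "lost", "unclear"},
    "satisfaction": {"love", "great", "easy"},
    "trust": {"trust", "privacy", "secure"},
}


def auto_code(text: str) -> list[str]:
    """Auto-coding: return every tag whose trigger words appear in the entry."""
    words = {w.strip(".,!?\"'").lower() for w in text.split()}
    return sorted(tag for tag, triggers in CODEBOOK.items() if words & triggers)


def cluster(entries: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Clustering: group participant IDs by tag across all entries."""
    groups: dict[str, set[str]] = defaultdict(set)
    for participant, text in entries:
        for tag in auto_code(text):
            groups[tag].add(participant)
    return dict(groups)


def summarize(groups: dict[str, set[str]]) -> list[str]:
    """Summarization: one digest line per tag, most widespread first."""
    ranked = sorted(groups.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    return [f"{len(people)} participant(s) mentioned {tag}" for tag, people in ranked]


entries = [
    ("P1", "I got lost in the settings menu."),
    ("P2", "Could not find the export button, very unclear."),
    ("P2", "Still confused about navigation."),
    ("P3", "Logging a meal was easy today."),
]
for line in summarize(cluster(entries)):
    print(line)
```

Note what the sketch counts: how many participants share a tag. It has no way to say whether that tag matters for your product, which is exactly the repetition-versus-relevance gap described above.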

Where AI Falls Short (and Why Human Judgment Matters)

Context blindness: AI can’t tell if a “frustration” is trivial (“loading spinner was slow once”) or critical (“I can’t log in”).

Nuance loss: Humor, sarcasm, and cultural references often get misread. Example: “I love when the app crashes daily 🙄” → tagged as “positive sentiment.”

Ethical risk: Diary data often contains sensitive personal details. Any AI tool that processes it needs strict oversight of privacy and storage.

Bottom line: AI can accelerate analysis, but it cannot reliably interpret every entry in its context.
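
The sarcasm example is easy to reproduce. The sketch below uses a deliberately naive word-counting approach with tiny, made-up lexicons. Modern models are more sophisticated than this, but the failure mode is the same in kind: surface wording wins over tone.

```python
# Tiny illustrative lexicons; hypothetical, not taken from any real sentiment library.
POSITIVE = {"love", "great", "easy", "helpful"}
NEGATIVE = {"hate", "broken", "slow", "confusing"}


def naive_sentiment(text: str) -> str:
    """Count lexicon hits. Tone, emoji, and context are ignored entirely."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


entry = "I love when the app crashes daily 🙄"
print(naive_sentiment(entry))  # positive: "love" matches, "crashes" and the eye-roll do not
```

A researcher reading the same entry sees the eye-roll and the word “crashes” and tags it as frustration without a second thought.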

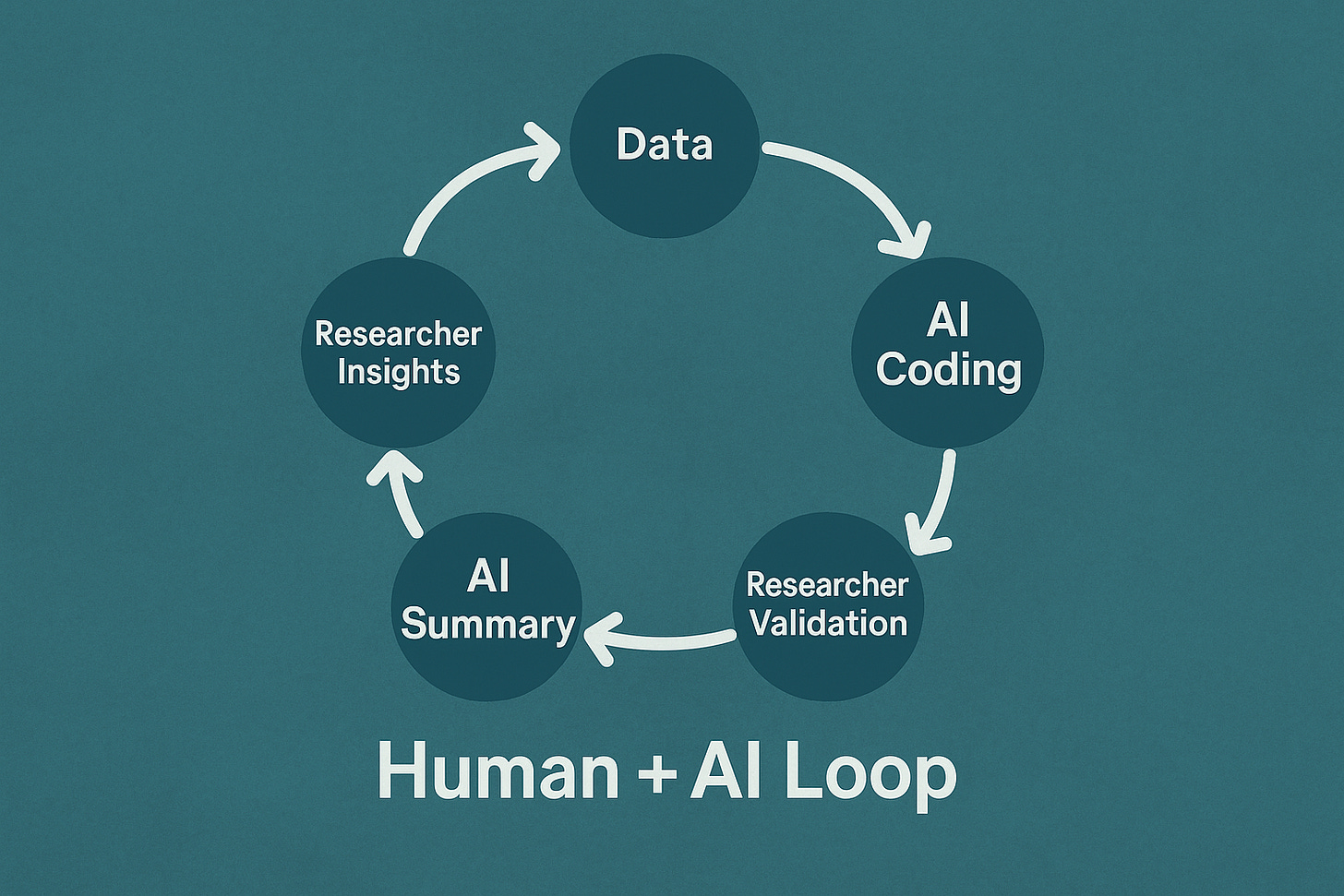

A Hybrid Research Framework: Researcher + Machine

Best practice is to use AI as a partner, not a replacement.

Collect entries normally (text, voice, or photo).

AI pass: let AI code and cluster first.

Researcher validation: merge or correct the AI’s clusters and check for nuance.

Human synthesis: align findings with business questions.

AI assist: generate draft summaries, visuals, or reports.

This saves time without sacrificing credibility.
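
One way to picture the workflow above is as a small pipeline in which AI output stays provisional until a researcher signs off. This is a sketch under assumptions, not a prescribed implementation: `CodedEntry`, `placeholder_tagger`, and the `corrections` mapping are all hypothetical names, and the tagger is a trivial stand-in for a real model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CodedEntry:
    participant: str
    text: str
    ai_tag: str                      # provisional tag from the AI pass
    final_tag: Optional[str] = None  # set only during researcher validation
    reviewed: bool = False


def ai_pass(raw: list[tuple[str, str]], tagger: Callable[[str], str]) -> list[CodedEntry]:
    """AI pass: attach a provisional tag to every collected entry."""
    return [CodedEntry(participant, text, tagger(text)) for participant, text in raw]


def researcher_validation(entries: list[CodedEntry], corrections: dict[int, str]) -> list[CodedEntry]:
    """Researcher validation: corrections maps entry index -> corrected tag.
    Entries without a correction keep the AI tag, but are still marked reviewed."""
    for index, entry in enumerate(entries):
        entry.final_tag = corrections.get(index, entry.ai_tag)
        entry.reviewed = True
    return entries


def themes_for_synthesis(entries: list[CodedEntry]) -> dict[str, int]:
    """Human synthesis input: count only tags a researcher has reviewed."""
    counts: dict[str, int] = {}
    for entry in entries:
        if entry.reviewed and entry.final_tag is not None:
            counts[entry.final_tag] = counts.get(entry.final_tag, 0) + 1
    return counts


def placeholder_tagger(text: str) -> str:
    """Stand-in for a real model; fooled by sarcasm on purpose."""
    return "satisfaction" if "love" in text.lower() else "frustration"


raw = [
    ("P1", "I love when the app crashes daily 🙄"),
    ("P2", "Could not log in again this morning."),
]
coded = ai_pass(raw, placeholder_tagger)
validated = researcher_validation(coded, corrections={0: "frustration"})
print(themes_for_synthesis(validated))  # {'frustration': 2}
```

The design choice worth copying is the separation of `ai_tag` from `final_tag`: the machine's guess is kept for auditing, but only reviewed tags feed synthesis and reporting.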

Real-World Examples of AI in Diary Studies

Healthcare: AI grouped patient logs into symptom categories. Researchers validated clusters to spot adherence gaps quickly.

Education: AI summaries of student reflections flagged engagement drops mid-week. Researchers dug deeper to find causes.

SaaS onboarding: AI grouped diary entries into “quick wins” vs. “long-term struggles.” Researchers used this to prioritize feature fixes.

In each case, AI sped up clustering, but researchers delivered meaning.

The Risks of Over-Reliance on Automation

False confidence: Clean dashboards don’t guarantee accurate insights.

Bias amplification: AI trained on biased data may over-tag certain groups or behaviors.

De-skilling: If teams rely fully on automation, they risk losing research depth over time.

UXCON25 Spotlight: ONLY 24 DAYS TO GO

Join us at UXCON25, where we’ll explore:

Case study: how AI-assisted diary studies cut analysis from 6 weeks to 10 days.

Panel: what researchers trust AI to do and what they never outsource.

Live demo: hybrid coding workflow in action.

Thanks to Great Question, tickets are now more wallet-friendly than ever.

Resource Corner

Final Thought

Diary studies reveal how people live with products over time. AI can tame the flood of data, but it cannot replace human interpretation.

Use machines to cut noise. Use researchers to extract meaning.

The future of diary studies isn’t automated; it’s augmented.